A Predictive Data Analysis is a type of data-analysis where after the complete statistical study of the data, the model predicts some estimate when it receives a new datum. It can be for various types of predictions categorization.

- Some other types of data-analysis can be Descriptive, Diagnostic or Prescriptive Analysis.

This Data Mining Project involves a sequence of (six) steps i.e.

a) Business Understanding

b) Data Understanding

c) Data Preparation

d) Modelling

e) Evaluation

f) Deployment

Main Data-Mining Concepts Used

In this project, I will be using the time series dataset(s) that will consist of the data values of different locations, worldwide.

DATA PREPROCESSIG & POSTPROCESSING

- Anomaly/Outliers - identification, verification & removal

- Unit Scaling for Normalization

- Identification of the type of distribution

- Data Reduction

- Exploratory Data Analysis (EDA) - using boxplots & scatter-plots

MODELLING

- Regression

- Model overfitting

- SVMK, kNN, Linear & Polynomial Regression Algorithms

Table of Contents

1. Introduction

2. Business Understanding

3. Data Understanding

4. Data Preparation

5. Modelling

6. Evaluation

7. Deployment

8. Conclusion

9. Bibliography

The Problem Statement…

History of Pandemics:

Great Plague of Marseille: 1720

As per the records Great Plague of Marseille started when a ship called Grand-Saint-Antoine docked in Marseille, France, carrying a cargo of goods from the eastern Mediterranean.

It continued for the next three years, killing up to 30% of the population of Marseille.

First Cholera Pandemic: 1820

In 1820 the First Cholera Pandemic occurred, in Asia, affecting countries, mainly Indonesia, Thailand and the Philippines. This pandemic has also killed about 100,000 as declared officially. It was a bacterial infection caused due to the contaminated water.

Spanish Influenza/Flu: 1920

Spanish Flu was the most recent and the most unrelenting pandemics. It has infected about half a billion people and killed 100 million. The Spanish flu holds the official record for the deadliest pandemic officially recorded in history.

Story of its origin -

During November, 2019 - a severe viral infection was noticed in Wuhan, a city in Hubei provinces of China. On November 17th 2019, the first case of this infection was reported. Doctor’s initially took it lightly as if it was a normal fever/cold. But, when a wide range of patients reported similar kind of symptoms, a doctor - Dr. Li Wenliang of Chinese Academy of Sciences (CAS) Lab, claimed that it was a type of severe acute respiratory syndrome, spreading through a new coronavirus transmission in the whole Hubei province, very rapidly. Reportedly, many attempts were made not to leak this, anywhere. But anyhow, this news got exposed during the late December, 2019. In January, Dr. Li himself died suffering with the same deadly viral infection.

As per the sources, in 2003, the same lab found first deadly SARS Coronavirus, leading to 813 casualties, all over the world, within two months!

Initially, it was named as “Wuhan Virus”. But, as it started spreading rapidly from Hubei to the whole mainland of China, its name got replaced by term “China Virus”.

Finally, on Feb. 11th 2020, the World Health Organization (WHO) gave the disease an official name: COVID-19.

WHO has also declared the COVID-19 as a pandemic.

Since the outbreak of COVID-19, over 350,000 people have been infected throughout the world and over 15,000 people have been killed.

Map GIF

COVID-19: Outbreak in world -

A grand Cruise Ship called Diamond Princess was all set for it’s two weeks’ journey from Yokohama (Japan) to China, Vietnam, Taiwan and back to Japan. Guests boarded it on January 20th, but as the journey was about to end, on February 1st tested positive for coronavirus, who disembarked in Hong Kong on January 25th.

The cruise was cancelled from sailing as Japanese health instructors asked to check all the passengers, along with the crew.

And since then, the number of confirmed cases for COVID-19 kept increasing. Currently, the ship was carrying over 3500 people.

As per the data available, following graphs show the 2019-nCoV cases have increased since Jan. 22nd:

- Confirmed Cases:

- Death Cases:

About the Disease:

The COVID-19 is spread as rapidly as it has the Reproduction Number (R-naught) 2.5.

Reproduction Number

Reproduction Number: is meant for the number of average persons, whom an infected person is further infecting. In more generalized language, on an average, from every COVID-19 patient, virus are getting transmitted to at least 2.5 fit persons.

For Ebola, this number is appx. 1.7

In order to have control on any epidemic, the reproducibility score has to be decreased as much as possible.

That’s why, most of the highly countries are locked down. In many of them, there are the shortage of hospitals/beds/doctors, too.

Due to the lockdown, the market is also going down.

Symptoms:

The severe acute respiratory syndrome COVID-19 (SARS-CoV-19), which is often termed as 2019-nCoV, as well, is basically a viral disease spread by the novel coronavirus in which the virus tend to attack onto the respiratory system of the patient.

Though the studies are still going on, following are some most common symptoms of COVID-19, known so far:

- Fever

- Dry Cough

- Fatigue

- Shortness of Breath

- Nasal Congestion etc….

These are the symptoms that are reported by most of the patients. Apart from these, few patients also found to develop aches and pains and get the sniffles, according to data from China.

Business Understanding:

Now we all are well aware that this is a very severe pandemic i.e. the pandemic of 21st century. Not only this but another major problem is that it has been around 5 months, since when the 2019-nCoV appeared, first.

Scientists are still trying to create the vaccine as soon as possible, but for now, no final cure is up.

What’s the significance of this analysis?

Due to this pandemic:

- Almost every country in the world has at least one confirm case of COVID-19

- The diseases is spreading very rapidly

- Economy of various countries is going down

- Casualties are increasing even more, etc….

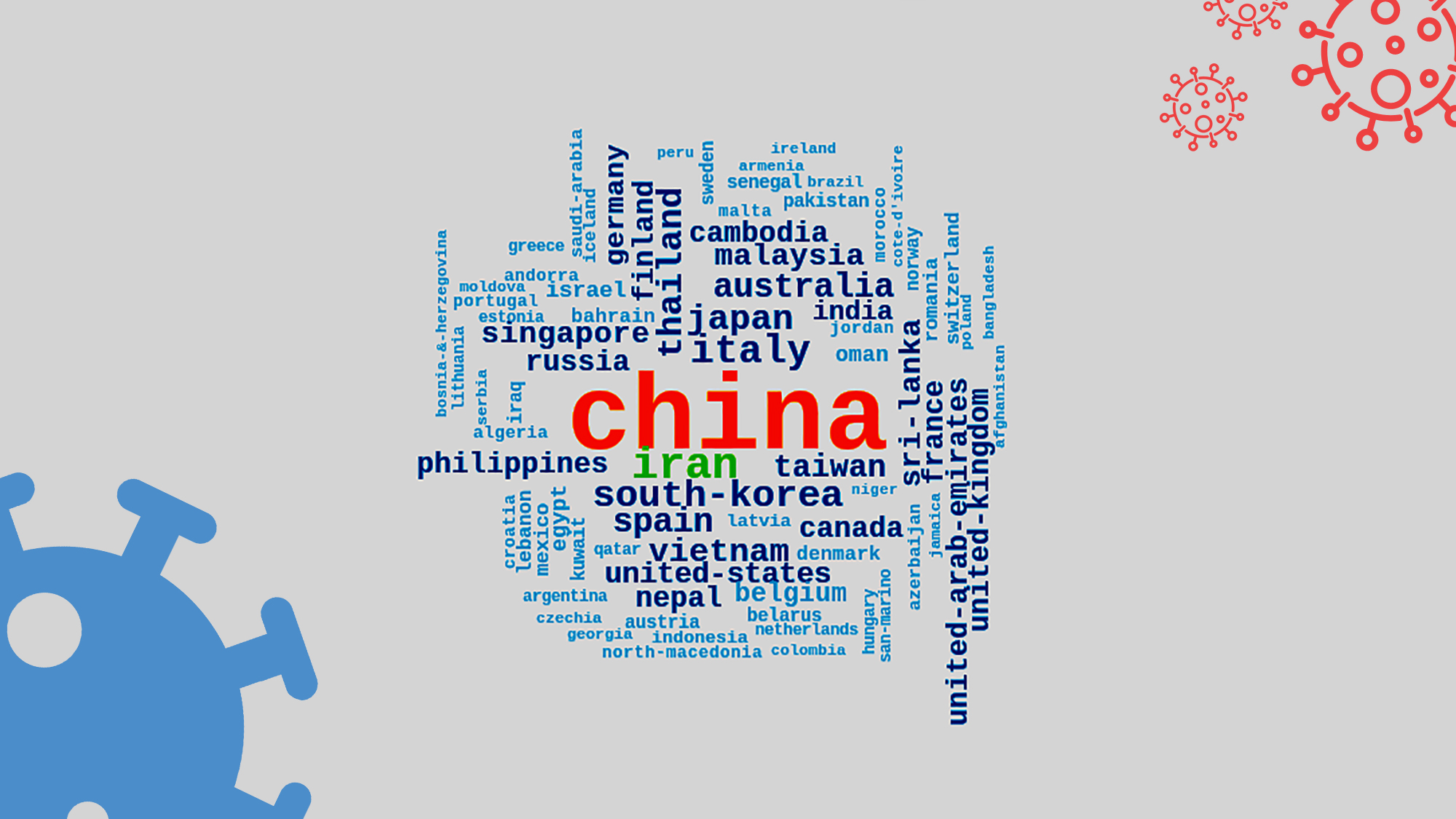

China, being the origin of the COVID-19, is the most affected country, in the world.

* This becomes more clear from the following word-clouds

(total confirmed cases & deaths due to coronavirus till 21/03/2020)

Finds an estimate about the rate of increase/decrease in appearance of new cases for next one week, in case of China.

- This estimate can help the country to know how much new beds/hospitals are going to be required within next few days.

- Helpful for the country in terms of the financial planning, as the economy is also going down.

- Directs where the focus has to be driven first.

- It would convey a more precise information for deciding that how the money has to be divided for the constructions of new hospitals/isolation centres, COVID-19 test kits, other medical equipments, caring and treatments, etc….

This all would be helpful for other countries as well, in order to bring the Reproduction Number (R-naught) down to an acceptable limit. (by applying useful strategies of China)

* We’ll be preparing separate datasets for generating few of these visualizations, while data-preparation phase.

* For understanding how these visuals are developed from scratch, please refer to map.R and visualizer.R.

Data Understanding:

* Data & Problem Statement

Our aim is to analyse that what would be the status of the epidemic will change, within a week and hence, how log it might take to China to have its control over this infection

Further, we’d aim to generalize it for any of the country, so that we could find the status of the same, whenever required. Obviously, we’d have to change few parameters, tho’ we won’t have to waste our time again and again in data preparation for a particular country.

Hence, we need the data about:

- Confirmed cases of COVID-19, throughout the world

- Death cases due to COVID-19, throughout the world

- Total Recovery from COVID-19, throughout the world

We’ve collected this data from various sources including Johns Hopkins University, WHO & MoHFW - India.

Beginning with the coding section:

Let’s start with setting-up our working directory and load all the required packages, that we would be required:

Initial Project Setup

##### Loading LIBRARIES #####

library(stringr)

library(ggplot2)

library(AUCRF)

library(randomForest)

library(RFmarkerDetector)

library(caret)

library(mlbench)

library(kernlab)* Data Collection

Basically, we have collected the raw data from websites and GitHub repository.

# loading raw data

check.Confirmed = read.csv("static/raw/time_series_19-covid-Confirmed.csv")

check.Deaths = read.csv("static/raw/time_series_19-covid-Deaths.csv")

check.Recovered = read.csv("static/raw/time_series_19-covid-Recovered.csv")The data, that we have collected is in the CSV (i.e. “comma-separated values”) format.

A CSV file is used to store the structured data row-wise, where the data elements in each row is separated by a comma (,).

It’s pretty similar to the following:

Belinda Jameson,2017,Cushing House,148,3.52

Jeff Smith,2018,Prescott House,17-D,3.20

Let’s view the head portion of our raw data:

## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 1 Thailand 15.0000 101.0000 2 3

## 2 Japan 36.0000 138.0000 2 1

## 3 Singapore 1.2833 103.8333 0 1

## 4 Nepal 28.1667 84.2500 0 0

## 5 Malaysia 2.5000 112.5000 0 0

## 6 British Columbia Canada 49.2827 -123.1207 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20 X1.31.20

## 1 5 7 8 8 14 14 14 19

## 2 2 2 4 4 7 7 11 15

## 3 3 3 4 5 7 7 10 13

## 4 0 1 1 1 1 1 1 1

## 5 0 3 4 4 4 7 8 8

## 6 0 0 0 0 1 1 1 1

## X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20 X2.8.20 X2.9.20

## 1 19 19 19 25 25 25 25 32 32

## 2 20 20 20 22 22 45 25 25 26

## 3 16 18 18 24 28 28 30 33 40

## 4 1 1 1 1 1 1 1 1 1

## 5 8 8 8 10 12 12 12 16 16

## 6 1 1 1 1 2 2 4 4 4

## X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20 X2.16.20 X2.17.20

## 1 32 33 33 33 33 33 34 35

## 2 26 26 28 28 29 43 59 66

## 3 45 47 50 58 67 72 75 77

## 4 1 1 1 1 1 1 1 1

## 5 18 18 18 19 19 22 22 22

## 6 4 4 4 4 4 4 4 5

## X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20 X2.23.20 X2.24.20 X2.25.20

## 1 35 35 35 35 35 35 35 37

## 2 74 84 94 105 122 147 159 170

## 3 81 84 84 85 85 89 89 91

## 4 1 1 1 1 1 1 1 1

## 5 22 22 22 22 22 22 22 22

## 6 5 5 5 6 6 6 6 7

## X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20 X3.2.20 X3.3.20 X3.4.20

## 1 40 40 41 42 42 43 43 43

## 2 189 214 228 241 256 274 293 331

## 3 93 93 93 102 106 108 110 110

## 4 1 1 1 1 1 1 1 1

## 5 22 23 23 25 29 29 36 50

## 6 7 7 7 8 8 8 9 12

## X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20 X3.10.20 X3.11.20 X3.12.20

## 1 47 48 50 50 50 53 59 70

## 2 360 420 461 502 511 581 639 639

## 3 117 130 138 150 150 160 178 178

## 4 1 1 1 1 1 1 1 1

## 5 50 83 93 99 117 129 149 149

## 6 13 21 21 27 32 32 39 46

## X3.13.20 X3.14.20 X3.15.20 X3.16.20 X3.17.20 X3.18.20 X3.19.20

## 1 75 82 114 147 177 212 272

## 2 701 773 839 825 878 889 924

## 3 200 212 226 243 266 313 345

## 4 1 1 1 1 1 1 1

## 5 197 238 428 566 673 790 900

## 6 64 64 73 103 103 186 231## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 1 Thailand 15.0000 101.0000 0 0

## 2 Japan 36.0000 138.0000 0 0

## 3 Singapore 1.2833 103.8333 0 0

## 4 Nepal 28.1667 84.2500 0 0

## 5 Malaysia 2.5000 112.5000 0 0

## 6 British Columbia Canada 49.2827 -123.1207 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20 X1.31.20

## 1 0 0 0 0 0 0 0 0

## 2 0 0 0 0 0 0 0 0

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20 X2.8.20 X2.9.20

## 1 0 0 0 0 0 0 0 0 0

## 2 0 0 0 0 0 0 0 0 0

## 3 0 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0 0

## X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20 X2.16.20 X2.17.20

## 1 0 0 0 0 0 0 0 0

## 2 0 0 0 1 1 1 1 1

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20 X2.23.20 X2.24.20 X2.25.20

## 1 0 0 0 0 0 0 0 0

## 2 1 1 1 1 1 1 1 1

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20 X3.2.20 X3.3.20 X3.4.20

## 1 0 0 0 0 1 1 1 1

## 2 2 4 4 5 6 6 6 6

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20 X3.10.20 X3.11.20 X3.12.20

## 1 1 1 1 1 1 1 1 1

## 2 6 6 6 6 10 10 15 16

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 1 1 1 1

## X3.13.20 X3.14.20 X3.15.20 X3.16.20 X3.17.20 X3.18.20 X3.19.20

## 1 1 1 1 1 1 1 1

## 2 19 22 22 27 29 29 29

## 3 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0

## 5 0 0 0 0 2 2 2

## 6 1 1 1 4 4 7 7## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 1 Thailand 15.0000 101.0000 0 0

## 2 Japan 36.0000 138.0000 0 0

## 3 Singapore 1.2833 103.8333 0 0

## 4 Nepal 28.1667 84.2500 0 0

## 5 Malaysia 2.5000 112.5000 0 0

## 6 British Columbia Canada 49.2827 -123.1207 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20 X1.31.20

## 1 0 0 2 2 5 5 5 5

## 2 0 0 1 1 1 1 1 1

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20 X2.8.20 X2.9.20

## 1 5 5 5 5 5 5 5 10 10

## 2 1 1 1 1 1 1 1 1 1

## 3 0 0 0 0 0 0 0 2 2

## 4 0 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 1 1 1

## 6 0 0 0 0 0 0 0 0 0

## X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20 X2.16.20 X2.17.20

## 1 10 10 10 12 12 12 14 15

## 2 4 9 9 9 9 12 12 12

## 3 2 9 15 15 17 18 18 24

## 4 0 0 1 1 1 1 1 1

## 5 1 3 3 3 3 7 7 7

## 6 0 0 0 0 0 0 0 0

## X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20 X2.23.20 X2.24.20 X2.25.20

## 1 15 15 15 17 17 21 21 22

## 2 13 18 18 22 22 22 22 22

## 3 29 34 34 37 37 51 51 53

## 4 1 1 1 1 1 1 1 1

## 5 13 15 15 15 15 15 18 18

## 6 0 0 0 0 0 0 0 0

## X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20 X3.2.20 X3.3.20 X3.4.20

## 1 22 22 28 28 28 31 31 31

## 2 22 22 22 32 32 32 43 43

## 3 62 62 62 72 72 78 78 78

## 4 1 1 1 1 1 1 1 1

## 5 18 18 18 18 18 18 22 22

## 6 0 3 3 3 3 3 3 3

## X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20 X3.10.20 X3.11.20 X3.12.20

## 1 31 31 31 31 31 33 34 34

## 2 43 46 76 76 76 101 118 118

## 3 78 78 78 78 78 78 96 96

## 4 1 1 1 1 1 1 1 1

## 5 22 22 23 24 24 24 26 26

## 6 3 3 4 4 4 4 4 4

## X3.13.20 X3.14.20 X3.15.20 X3.16.20 X3.17.20 X3.18.20 X3.19.20

## 1 35 35 35 35 41 42 42

## 2 118 118 118 144 144 144 150

## 3 97 105 105 109 114 114 114

## 4 1 1 1 1 1 1 1

## 5 26 35 42 42 49 60 75

## 6 4 4 4 4 4 4 4We can see that type of collected data is: time-series

A Time series data of a variable have a set of observations on values at different points of time. They are usually collected at fixed intervals, such as daily, weekly, monthly, annually, quarterly, etc.

We observe that all the three datasets have same columns as well as the same type of data.

## Number of columns in all 3 datasets:-matrix(

c("Confirmed", "Deaths", "Recovered", ncol(check.Confirmed), ncol(check.Deaths), ncol(check.Recovered)),

nrow = 2, ncol = 3, byrow = T

)## [,1] [,2] [,3]

## [1,] "Confirmed" "Deaths" "Recovered"

## [2,] "62" "62" "62"## Dimentions of datasets:-## [1] 468 62######################

# columns' name

#cat("Name of columns in all 3 datasets:-\n")

#colnames(check.Confirmed)

#colnames(check.Deaths)

#colnames(check.Recovered)

Let’s see the structure of these datasets:

## 'data.frame': 468 obs. of 62 variables:

## $ Province.State: Factor w/ 321 levels "","Adams, IN",..: 1 1 1 1 1 25 196 298 237 1 ...

## $ Country.Region: Factor w/ 155 levels "Afghanistan",..: 142 76 129 103 90 27 8 8 8 25 ...

## $ Lat : num 15 36 1.28 28.17 2.5 ...

## $ Long : num 101 138 103.8 84.2 112.5 ...

## $ X1.22.20 : int 2 2 0 0 0 0 0 0 0 0 ...

## $ X1.23.20 : int 3 1 1 0 0 0 0 0 0 0 ...

## $ X1.24.20 : int 5 2 3 0 0 0 0 0 0 0 ...

## $ X1.25.20 : int 7 2 3 1 3 0 0 0 0 0 ...

## $ X1.26.20 : int 8 4 4 1 4 0 3 1 0 0 ...

## $ X1.27.20 : int 8 4 5 1 4 0 4 1 0 1 ...

## $ X1.28.20 : int 14 7 7 1 4 1 4 1 0 1 ...

## $ X1.29.20 : int 14 7 7 1 7 1 4 1 1 1 ...

## $ X1.30.20 : int 14 11 10 1 8 1 4 2 3 1 ...

## $ X1.31.20 : int 19 15 13 1 8 1 4 3 2 1 ...

## $ X2.1.20 : int 19 20 16 1 8 1 4 4 3 1 ...

## $ X2.2.20 : int 19 20 18 1 8 1 4 4 2 1 ...

## $ X2.3.20 : int 19 20 18 1 8 1 4 4 2 1 ...

## $ X2.4.20 : int 25 22 24 1 10 1 4 4 3 1 ...

## $ X2.5.20 : int 25 22 28 1 12 2 4 4 3 1 ...

## $ X2.6.20 : int 25 45 28 1 12 2 4 4 4 1 ...

## $ X2.7.20 : int 25 25 30 1 12 4 4 4 5 1 ...

## $ X2.8.20 : int 32 25 33 1 16 4 4 4 5 1 ...

## $ X2.9.20 : int 32 26 40 1 16 4 4 4 5 1 ...

## $ X2.10.20 : int 32 26 45 1 18 4 4 4 5 1 ...

## $ X2.11.20 : int 33 26 47 1 18 4 4 4 5 1 ...

## $ X2.12.20 : int 33 28 50 1 18 4 4 4 5 1 ...

## $ X2.13.20 : int 33 28 58 1 19 4 4 4 5 1 ...

## $ X2.14.20 : int 33 29 67 1 19 4 4 4 5 1 ...

## $ X2.15.20 : int 33 43 72 1 22 4 4 4 5 1 ...

## $ X2.16.20 : int 34 59 75 1 22 4 4 4 5 1 ...

## $ X2.17.20 : int 35 66 77 1 22 5 4 4 5 1 ...

## $ X2.18.20 : int 35 74 81 1 22 5 4 4 5 1 ...

## $ X2.19.20 : int 35 84 84 1 22 5 4 4 5 1 ...

## $ X2.20.20 : int 35 94 84 1 22 5 4 4 5 1 ...

## $ X2.21.20 : int 35 105 85 1 22 6 4 4 5 1 ...

## $ X2.22.20 : int 35 122 85 1 22 6 4 4 5 1 ...

## $ X2.23.20 : int 35 147 89 1 22 6 4 4 5 1 ...

## $ X2.24.20 : int 35 159 89 1 22 6 4 4 5 1 ...

## $ X2.25.20 : int 37 170 91 1 22 7 4 4 5 1 ...

## $ X2.26.20 : int 40 189 93 1 22 7 4 4 5 1 ...

## $ X2.27.20 : int 40 214 93 1 23 7 4 4 5 1 ...

## $ X2.28.20 : int 41 228 93 1 23 7 4 4 5 1 ...

## $ X2.29.20 : int 42 241 102 1 25 8 4 7 9 1 ...

## $ X3.1.20 : int 42 256 106 1 29 8 6 7 9 1 ...

## $ X3.2.20 : int 43 274 108 1 29 8 6 9 9 1 ...

## $ X3.3.20 : int 43 293 110 1 36 9 13 9 11 1 ...

## $ X3.4.20 : int 43 331 110 1 50 12 22 10 11 1 ...

## $ X3.5.20 : int 47 360 117 1 50 13 22 10 13 1 ...

## $ X3.6.20 : int 48 420 130 1 83 21 26 10 13 1 ...

## $ X3.7.20 : int 50 461 138 1 93 21 28 11 13 1 ...

## $ X3.8.20 : int 50 502 150 1 99 27 38 11 15 2 ...

## $ X3.9.20 : int 50 511 150 1 117 32 48 15 15 2 ...

## $ X3.10.20 : int 53 581 160 1 129 32 55 18 18 2 ...

## $ X3.11.20 : int 59 639 178 1 149 39 65 21 20 3 ...

## $ X3.12.20 : int 70 639 178 1 149 46 65 21 20 3 ...

## $ X3.13.20 : int 75 701 200 1 197 64 92 36 35 5 ...

## $ X3.14.20 : int 82 773 212 1 238 64 112 49 46 7 ...

## $ X3.15.20 : int 114 839 226 1 428 73 134 57 61 7 ...

## $ X3.16.20 : int 147 825 243 1 566 103 171 71 68 7 ...

## $ X3.17.20 : int 177 878 266 1 673 103 210 94 78 33 ...

## $ X3.18.20 : int 212 889 313 1 790 186 267 121 94 35 ...

## $ X3.19.20 : int 272 924 345 1 900 231 307 121 144 37 ...Explanation of each columns in fetched data:

- There are 3 dedicated databases for data about Confirmed/Death/Recovery cases, all around the world

Province.State:

- Data-type: factor they can be specific, unique and valid names

- Holds name of City/Province/State, where the data is coming from

- Eg.: Hubei

Country.Region:

- Data-type: factor they can be specific, unique and valid names

- Holds name of the country, in which the reported area comes

- Eg.: China (Hubei is a Province of China)

Lat:

- Data-type: numeric (i.e. can have values in decimals, too)

- Holds the Latitude position of the given place(as in col1)

- Eg.: Latitude position of Hubei = 30.9756

Long:

- Data-type: numeric (i.e. can have values in decimals, too)

- Holds the longitude position of the given place(as in col1)

- Eg.: Longitude position of Hubei = 112.2707

Col. 5 to 62:

- Data-type: integer (i.e. discrete) and remains always positive as it determines the no, of individuals

- It’s a time series data where the data is collected at various interval of time

- Each datum value is represented, based on the different days in series (from 22/01/2020)

- The constant entity is the location, whose data is represented in every row

## A sample data of a location from "Confirmed Cases'" dataset:## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 155 Hubei China 30.9756 112.2707 444 444

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 155 549 761 1058 1423 3554 3554 4903

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 155 5806 7153 11177 13522 16678 19665 22112 24953

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 155 27100 29631 31728 33366 33366 48206 54406 56249

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 155 58182 59989 61682 62031 62442 62662 64084

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 155 64084 64287 64786 65187 65596 65914 66337 66907

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 155 67103 67217 67332 67466 67592 67666 67707 67743

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 155 67760 67773 67781 67786 67790 67794 67798

## X3.17.20 X3.18.20 X3.19.20

## 155 67799 67800 67800Data Preparation:

Now, as we have the raw data for our analysis, we can move forward for our next phase i.e. Data-Preparation.

* The data-preparation is considered to be the most time-consuming phase of any data science project.

* On an average, an ideal data-science project’s 90% of time is spent during Data-Collection and Data-Preparation.

* Data Cleaning

Whenever we collect any kind of the raw-data from various sources, it has a lot of the vulnerabilities.

Most often, these are of following types:

- NAs and NaNs

- Missing data values

- Incorrect data values

Checking for these flaws in our data:

# sample NA values

check.Confirmed[which(str_detect(check.Confirmed$Country.Region, "Cruise Ship")),]## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 166 Diamond Princess Cruise Ship 35.4437 139.638 NA NA

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 166 NA NA NA NA NA NA NA

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 166 NA NA NA NA NA NA NA 61

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 166 61 64 135 135 175 175 218 285

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 166 355 454 542 621 634 634 634

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 166 691 691 691 705 705 705 705 705

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 166 705 706 706 706 696 696 696 696

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 166 696 696 696 696 696 696 696

## X3.17.20 X3.18.20 X3.19.20

## 166 696 712 712# sample wrong data "french guiana" the data value can not decrease on next day

check.Confirmed[which(str_detect(check.Confirmed$Province.State, "French Guiana")),]## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 431 French Guiana France 3.9339 -53.1258 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 431 0 0 0 0 0 0 0

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 431 0 0 0 0 0 0 0 0

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 431 0 0 0 0 0 0 0 0

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 431 0 0 0 0 0 0 0

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 431 0 0 0 0 0 0 0 0

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 431 0 0 0 0 0 5 5 5

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 431 5 3 5 3 5 7 11

## X3.17.20 X3.18.20 X3.19.20

## 431 11 11 11## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 1 Thailand 15.0000 101.0000 0 0

## 2 Japan 36.0000 138.0000 0 0

## 3 Singapore 1.2833 103.8333 0 0

## 4 Nepal 28.1667 84.2500 0 0

## 5 Malaysia 2.5000 112.5000 0 0

## 6 British Columbia Canada 49.2827 -123.1207 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20 X1.31.20

## 1 0 0 2 2 5 5 5 5

## 2 0 0 1 1 1 1 1 1

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20 X2.8.20 X2.9.20

## 1 5 5 5 5 5 5 5 10 10

## 2 1 1 1 1 1 1 1 1 1

## 3 0 0 0 0 0 0 0 2 2

## 4 0 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 1 1 1

## 6 0 0 0 0 0 0 0 0 0

## X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20 X2.16.20 X2.17.20

## 1 10 10 10 12 12 12 14 15

## 2 4 9 9 9 9 12 12 12

## 3 2 9 15 15 17 18 18 24

## 4 0 0 1 1 1 1 1 1

## 5 1 3 3 3 3 7 7 7

## 6 0 0 0 0 0 0 0 0

## X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20 X2.23.20 X2.24.20 X2.25.20

## 1 15 15 15 17 17 21 21 22

## 2 13 18 18 22 22 22 22 22

## 3 29 34 34 37 37 51 51 53

## 4 1 1 1 1 1 1 1 1

## 5 13 15 15 15 15 15 18 18

## 6 0 0 0 0 0 0 0 0

## X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20 X3.2.20 X3.3.20 X3.4.20

## 1 22 22 28 28 28 31 31 31

## 2 22 22 22 32 32 32 43 43

## 3 62 62 62 72 72 78 78 78

## 4 1 1 1 1 1 1 1 1

## 5 18 18 18 18 18 18 22 22

## 6 0 3 3 3 3 3 3 3

## X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20 X3.10.20 X3.11.20 X3.12.20

## 1 31 31 31 31 31 33 34 34

## 2 43 46 76 76 76 101 118 118

## 3 78 78 78 78 78 78 96 96

## 4 1 1 1 1 1 1 1 1

## 5 22 22 23 24 24 24 26 26

## 6 3 3 4 4 4 4 4 4

## X3.13.20 X3.14.20 X3.15.20 X3.16.20 X3.17.20 X3.18.20 X3.19.20

## 1 35 35 35 35 41 42 42

## 2 118 118 118 144 144 144 150

## 3 97 105 105 109 114 114 114

## 4 1 1 1 1 1 1 1

## 5 26 35 42 42 49 60 75

## 6 4 4 4 4 4 4 4

So, there are also many issues (like blanks in the place of states’ name and data of a Cruise Ship among countries’ data) with our available datasets.

To get rid of these issues, the data-cleaning is performed.

For data cleaning, we consider either of these two methods (or both, too):

- Removal:

Here we usually remove or delete those rows/columns, where we find the vulnerabilities.

These rows/columns might include NAs. - Replacement/Filling:

Here we replace the NAs or incorrect or blanks data values with some acceptable value.

Mostly, values are replaced by Mean or Mode values, so that the overall statistical structure may remain the same.

Sometimes, we also fill them on the basis of some specific calculations.

What will we do?

Because we have the time-series dataset populated with discrete data values, storing the total count of the total people (having COVID-19 confirmed, have died due to COVID-19 or have recovered from COVID-19), the issues:

- can NOT be resolved by MEAN

because in our case, either the Data value can remain CONSTANT or can INCREASE, on every next day.

the MEAN need not be discrete

MEAN can also be less than the previous data, for any particular day etc…- can NOT be resolved by MODE

because it’s a medical data and hence, any most often occurring number cannot be blindly replaced with a missing value etc…

Hence, we’ll NOT be using any of the replacement of MEAN/MODE/MEDIAN

We’ll replace with maximum values

We will be replacing the missing values or NAs with the maximum value up to a day before the current day.

It means that - the values are carried constant for the next day whose data is missing.

# removing NAs, replacing incorrect values

for (i in 1:nrow(check.Confirmed)) {

for (j in 5:ncol(check.Confirmed)) {

if(j==5) {

check.Confirmed[i,j] = ifelse(is.na(check.Confirmed[i, j]), 0, check.Confirmed[i,j])

} else {

if(is.na(check.Confirmed[i, j])){

check.Confirmed[i,j] = check.Confirmed[i, (j-1)]

} else if(check.Confirmed[i, (j-1)] > check.Confirmed[i, j]){

check.Confirmed[i,j] = check.Confirmed[i, (j-1)]

}

}

}

}

for (i in 1:nrow(check.Deaths)) {

for (j in 5:ncol(check.Deaths)) {

if(j==5) {

check.Deaths[i,j] = ifelse(is.na(check.Deaths[i, j]), 0, check.Deaths[i,j])

} else {

if(is.na(check.Deaths[i, j])){

check.Deaths[i,j] = check.Deaths[i, (j-1)]

} else if(check.Deaths[i, (j-1)] > check.Deaths[i, j]){

check.Deaths[i,j] = check.Deaths[i, (j-1)]

}

}

}

}

for (i in 1:nrow(check.Recovered)) {

for (j in 5:ncol(check.Recovered)) {

if(j==5) {

check.Recovered[i,j] = ifelse(is.na(check.Recovered[i, j]), 0, check.Recovered[i,j])

} else {

if(is.na(check.Recovered[i, j])){

check.Recovered[i,j] = check.Recovered[i, (j-1)]

} else if(check.Recovered[i, (j-1)] > check.Recovered[i, j]){

check.Recovered[i,j] = check.Recovered[i, (j-1)]

}

}

}

}# replace blanks and incorrect country/state names

# replacing in states

states = as.character(check.Confirmed$Province.State)

states.levels = as.character(levels(check.Confirmed$Province.State))

states[states %in% ""] = "Others"

states.levels[states.levels %in% ""] = "Others"

#######

states[states %in% "From Diamond Princess"] = "Diamond Princess"

states.levels = states.levels[!states.levels %in% "From Diamond Princess"]

# replacing in countries

countries = as.character(check.Confirmed$Country.Region)

countries.levels = as.character(levels(check.Confirmed$Country.Region))

countries[countries %in% "US"] = "United States"

countries[countries %in% "UK"] = "United Kingdom"

countries[countries %in% "Taiwan*"] = "Taiwan"

countries[countries %in% "The Bahamas"] = "Bahamas"

countries[countries %in% "Gambia, The"] = "Gambia"

countries[countries %in% "Korea, South"] = "South Korea"

countries[countries %in% c("Congo (Brazzaville)", "Congo (Kinshasa)", "Republic of the Congo")] = "Democratic Republic of the Congo"

###

countries.levels[countries.levels %in% "US"] = "United States"

countries.levels[countries.levels %in% "UK"] = "United Kingdom"

countries.levels[countries.levels %in% "Taiwan*"] = "Taiwan"

countries.levels[countries.levels %in% "The Bahamas"] = "Bahamas"

countries.levels[countries.levels %in% "Gambia, The"] = "Gambia"

countries.levels[countries.levels %in% "Korea, South"] = "South Korea"

countries.levels = countries.levels[!countries.levels %in% c("Congo (Brazzaville)", "Congo (Kinshasa)", "Republic of the Congo")]

countries.levels = c(countries.levels, "Democratic Republic of the Congo")

################################ rectified fectors

states.factor = factor(c(states), levels = c(states.levels))

countries.factor = factor(countries, levels = countries.levels)

## CUZ' INITIAL 4 COLUMNS ARE COMMON IN ALL 3 DATASETS ##

# editing factors in datasets

check.Confirmed = cbind(

Province.State = states.factor,

Country.Region = countries.factor,

check.Confirmed[,3:ncol(check.Confirmed)]

)

check.Deaths = cbind(

Province.State = states.factor,

Country.Region = countries.factor,

check.Deaths[,3:ncol(check.Deaths)]

)

check.Recovered = cbind(

Province.State = states.factor,

Country.Region = countries.factor,

check.Recovered[,3:ncol(check.Recovered)]

)Now our data has been cleaned.

Viewing the cleaned data.

## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 166 Diamond Princess Cruise Ship 35.4437 139.638 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 166 0 0 0 0 0 0 0

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 166 0 0 0 0 0 0 0 61

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 166 61 64 135 135 175 175 218 285

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 166 355 454 542 621 634 634 634

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 166 691 691 691 705 705 705 705 705

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 166 705 706 706 706 706 706 706 706

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 166 706 706 706 706 706 706 706

## X3.17.20 X3.18.20 X3.19.20

## 166 706 712 712# sample wrong data "french guiana"

check.Confirmed[which(str_detect(check.Confirmed$Province.State, "French Guiana")),]## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 431 French Guiana France 3.9339 -53.1258 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 431 0 0 0 0 0 0 0

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 431 0 0 0 0 0 0 0 0

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 431 0 0 0 0 0 0 0 0

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 431 0 0 0 0 0 0 0

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 431 0 0 0 0 0 0 0 0

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 431 0 0 0 0 0 5 5 5

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 431 5 5 5 5 5 7 11

## X3.17.20 X3.18.20 X3.19.20

## 431 11 11 11## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 1 Others Thailand 15.0000 101.0000 0 0

## 2 Others Japan 36.0000 138.0000 0 0

## 3 Others Singapore 1.2833 103.8333 0 0

## 4 Others Nepal 28.1667 84.2500 0 0

## 5 Others Malaysia 2.5000 112.5000 0 0

## 6 British Columbia Canada 49.2827 -123.1207 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20 X1.31.20

## 1 0 0 2 2 5 5 5 5

## 2 0 0 1 1 1 1 1 1

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20 X2.8.20 X2.9.20

## 1 5 5 5 5 5 5 5 10 10

## 2 1 1 1 1 1 1 1 1 1

## 3 0 0 0 0 0 0 0 2 2

## 4 0 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 1 1 1

## 6 0 0 0 0 0 0 0 0 0

## X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20 X2.16.20 X2.17.20

## 1 10 10 10 12 12 12 14 15

## 2 4 9 9 9 9 12 12 12

## 3 2 9 15 15 17 18 18 24

## 4 0 0 1 1 1 1 1 1

## 5 1 3 3 3 3 7 7 7

## 6 0 0 0 0 0 0 0 0

## X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20 X2.23.20 X2.24.20 X2.25.20

## 1 15 15 15 17 17 21 21 22

## 2 13 18 18 22 22 22 22 22

## 3 29 34 34 37 37 51 51 53

## 4 1 1 1 1 1 1 1 1

## 5 13 15 15 15 15 15 18 18

## 6 0 0 0 0 0 0 0 0

## X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20 X3.2.20 X3.3.20 X3.4.20

## 1 22 22 28 28 28 31 31 31

## 2 22 22 22 32 32 32 43 43

## 3 62 62 62 72 72 78 78 78

## 4 1 1 1 1 1 1 1 1

## 5 18 18 18 18 18 18 22 22

## 6 0 3 3 3 3 3 3 3

## X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20 X3.10.20 X3.11.20 X3.12.20

## 1 31 31 31 31 31 33 34 34

## 2 43 46 76 76 76 101 118 118

## 3 78 78 78 78 78 78 96 96

## 4 1 1 1 1 1 1 1 1

## 5 22 22 23 24 24 24 26 26

## 6 3 3 4 4 4 4 4 4

## X3.13.20 X3.14.20 X3.15.20 X3.16.20 X3.17.20 X3.18.20 X3.19.20

## 1 35 35 35 35 41 42 42

## 2 118 118 118 144 144 144 150

## 3 97 105 105 109 114 114 114

## 4 1 1 1 1 1 1 1

## 5 26 35 42 42 49 60 75

## 6 4 4 4 4 4 4 4

Identification of the Distribution

# total cases of different catagories on the daily basis

dailyConfirmed = apply(check.Confirmed[,5:ncol(check.Confirmed)], 2, sum)

dailyDeaths = apply(check.Deaths[,5:ncol(check.Deaths)], 2, sum)

dailyRecovered = apply(check.Recovered[,5:ncol(check.Recovered)], 2, sum)

# active cases

dailyActiveCases = dailyConfirmed - (dailyDeaths + dailyRecovered)

# day count till March 21st

days = 1:length(dailyConfirmed)trendDF = data.frame(

Day = days,

Confirmed = dailyConfirmed,

Deaths = dailyDeaths,

Recovered = dailyRecovered,

Active.Cases = dailyActiveCases)

head(trendDF, 5)## Day Confirmed Deaths Recovered Active.Cases

## X1.22.20 1 555 17 28 510

## X1.23.20 2 654 18 30 606

## X1.24.20 3 941 26 36 879

## X1.25.20 4 1434 42 39 1353

## X1.26.20 5 2118 56 52 2010Visualizing this trend

options(repr.plot.width=12, repr.plot.height=10)

ggplot(trendDF, aes(x = Day, y = Active.Cases)) +

# Active cases in LIGHTBLUE

geom_point(color = "lightblue") +

geom_line(color = "lightblue") +

# Confirmed Cases - RED

geom_point(aes(y = Confirmed), color = "red") +

geom_line(aes(y = Confirmed), color = "red") +

# Death Cases - BLACK

geom_point(aes(y = Deaths), color = "black") +

geom_line(aes(y = Deaths), color = "black") +

# Recovered Cases - GREEN

geom_point(aes(y = Recovered), color = "green") +

geom_line(aes(y = Recovered), color = "green") +

labs(x="Days Count", y="Cases Count") +

theme_classic() +

theme(

text = element_text(family = "Gill Sans")

,plot.title = element_text(size = 20, face = "bold", hjust = 0.5)

,plot.subtitle = element_text(size = 25, family = "Courier", face = "bold", hjust = 0.5)

,axis.text = element_text(size = 12)

,axis.title = element_text(size = 20)

)Here we see that all the main three catagories of cases have kept increasing with days!

allConfirmed = as.numeric(check.Confirmed[,ncol(check.Confirmed)])

allDeaths = as.numeric(check.Deaths[,ncol(check.Deaths)])

allRecoveries = as.numeric(check.Recovered[,ncol(check.Recovered)])Checking for the mean of Active Cases till 21st March:

# finding the mean of each catagories

meanConfirmed = mean(allConfirmed)

meanDeaths = mean(allDeaths)

meanRecovered = mean(allRecoveries)

meanConfirmed## [1] 519.7308## [1] 21.14316## [1] 181.3953This indicates that:

meanActiveCases = \({\mu}\) = 374.9399142

Calculating 1/\({\mu}\) using following equation:

\({\lambda}\) = 1/\({\mu}\)

## [1] 0.001924073Here we see that:

\({\lambda}\) > 0

The same thing is also true for rest of the two catagories.

As per our studies till now:

We find that- at a lot extent, the ternd follows an Exponential Distribution, as:

- The Cases are increasing with the Days

- \({\lambda}\) > 0 etc…

It becomes clear as we look onto the main properties of an Exponential Distribution:

- \({\lambda}\) > 0

- mean = \({\mu}\) = 1/\({\lambda}\)

- x-axis (corresponding to Days) belongs to the [ 0, \({\infty}\) )

- y value increases with x

So, we have the data distributed Exponentially

Although, we see that trend of active cases do NOT always increase, and hence, it is not distributed exponentially:

ggplot(trendDF, aes(x = Day, y = Active.Cases)) +

# Active cases in BLUE

geom_point(color = "blue") +

geom_line(color = "blue") +

theme_classic()So, we’ll have to do modelling that we wiil do later on.

* Data Reduction

Though we have cleaned the dataset, yet we see that ‘Diamond Princess’ Cruise is still therein among the countries’ data.

Hence, it’s an outlier, and hence, has to be separated.

So, now we’ll start the process of data reduction

# removing diamond princess

Diamond.Princess.Confirmed = check.Confirmed[ which(str_detect(check.Confirmed$Country.Region, "Cruise Ship", negate = F)), ]

check.Confirmed = check.Confirmed[ which(str_detect(check.Confirmed$Country.Region, "Cruise Ship", negate = T)), ]

Diamond.Princess.Deaths = check.Deaths[ which(str_detect(check.Deaths$Country.Region, "Cruise Ship", negate = F)),]

check.Deaths = check.Deaths[ which(str_detect(check.Deaths$Country.Region, "Cruise Ship", negate = T)), ]

Diamond.Princess.Recovered = check.Recovered[ which(str_detect(check.Recovered$Country.Region, "Cruise Ship", negate = F)), ]

check.Recovered = check.Recovered[ which(str_detect(check.Recovered$Country.Region, "Cruise Ship", negate = T)), ]

## Rectifying Row sequences

row.names(check.Confirmed) <- NULL

row.names(check.Deaths) <- NULL

row.names(check.Recovered) <- NULLVerifying if it is removed or not:

## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 166 Jiangsu China 32.9711 119.455 1 5

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 166 9 18 33 47 70 99 129

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 166 168 202 236 271 308 341 373 408

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 166 439 468 492 515 543 570 593 604

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 166 617 626 629 631 631 631 631

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 166 631 631 631 631 631 631 631 631

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 166 631 631 631 631 631 631 631 631

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 166 631 631 631 631 631 631 631

## X3.17.20 X3.18.20 X3.19.20

## 166 631 631 631#check.Deaths[166,]

#check.Recovered[166,]

# also checking dimention

cat("\nEarlier dimention: 468 X 62\n\n") # as we saw initially##

## Earlier dimention: 468 X 62## New dimention: 467 62OK! So it’s gone!

Scaling

Now we have the dataset that hold the counts of the COVID-19 cases of different geographical locations.

Hence, we can now create a dataset to generate the map for every unique day.(that we saw early in this project)

- It means, we want to plot all the countries/regions that are affected on a particular day

- It gives us an idea that - among all the given countries, either we are going to plot a selected country on world-map, or not, for a specific day

the factor on which basis we’ll be deciding is - whether country has any confirmed case till that day or not

So, We’d also need the Latitude and Longitude position for those country

Finally, we can roughly estimate that we can have only 2 choices for any region, say 0 & 1, such that:

0: don’t plot on map, if it has no (total) confirm cases

1: plot on map, if it has some confirm cases i.e. there is at least 1 confirm case on that day

Therefore, We’re going to use Unit Scaling to set all the values from 5th to last column

# in UNIT SCALING, all the data has either 0 or 1 value

ever.Affected = check.Confirmed

# Unit scaling

for (i in row.names(ever.Affected)) {

for (j in 5:ncol(ever.Affected)) {

if(ever.Affected[i,j] != 0)

ever.Affected[i,j] = 1

}

}

head(ever.Affected)## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 1 Others Thailand 15.0000 101.0000 1 1

## 2 Others Japan 36.0000 138.0000 1 1

## 3 Others Singapore 1.2833 103.8333 0 1

## 4 Others Nepal 28.1667 84.2500 0 0

## 5 Others Malaysia 2.5000 112.5000 0 0

## 6 British Columbia Canada 49.2827 -123.1207 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20 X1.31.20

## 1 1 1 1 1 1 1 1 1

## 2 1 1 1 1 1 1 1 1

## 3 1 1 1 1 1 1 1 1

## 4 0 1 1 1 1 1 1 1

## 5 0 1 1 1 1 1 1 1

## 6 0 0 0 0 1 1 1 1

## X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20 X2.8.20 X2.9.20

## 1 1 1 1 1 1 1 1 1 1

## 2 1 1 1 1 1 1 1 1 1

## 3 1 1 1 1 1 1 1 1 1

## 4 1 1 1 1 1 1 1 1 1

## 5 1 1 1 1 1 1 1 1 1

## 6 1 1 1 1 1 1 1 1 1

## X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20 X2.16.20 X2.17.20

## 1 1 1 1 1 1 1 1 1

## 2 1 1 1 1 1 1 1 1

## 3 1 1 1 1 1 1 1 1

## 4 1 1 1 1 1 1 1 1

## 5 1 1 1 1 1 1 1 1

## 6 1 1 1 1 1 1 1 1

## X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20 X2.23.20 X2.24.20 X2.25.20

## 1 1 1 1 1 1 1 1 1

## 2 1 1 1 1 1 1 1 1

## 3 1 1 1 1 1 1 1 1

## 4 1 1 1 1 1 1 1 1

## 5 1 1 1 1 1 1 1 1

## 6 1 1 1 1 1 1 1 1

## X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20 X3.2.20 X3.3.20 X3.4.20

## 1 1 1 1 1 1 1 1 1

## 2 1 1 1 1 1 1 1 1

## 3 1 1 1 1 1 1 1 1

## 4 1 1 1 1 1 1 1 1

## 5 1 1 1 1 1 1 1 1

## 6 1 1 1 1 1 1 1 1

## X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20 X3.10.20 X3.11.20 X3.12.20

## 1 1 1 1 1 1 1 1 1

## 2 1 1 1 1 1 1 1 1

## 3 1 1 1 1 1 1 1 1

## 4 1 1 1 1 1 1 1 1

## 5 1 1 1 1 1 1 1 1

## 6 1 1 1 1 1 1 1 1

## X3.13.20 X3.14.20 X3.15.20 X3.16.20 X3.17.20 X3.18.20 X3.19.20

## 1 1 1 1 1 1 1 1

## 2 1 1 1 1 1 1 1

## 3 1 1 1 1 1 1 1

## 4 1 1 1 1 1 1 1

## 5 1 1 1 1 1 1 1

## 6 1 1 1 1 1 1 1Next step is to find and remove the outliers:

We’ll use scatter plots & box plots to identify and compare the MEAN of every day to verify these outliers, so that we can remove them, successfully.

# Let's visualize our data to varify:

options(repr.plot.width=12, repr.plot.height=10)

ggplot(check.Confirmed) +

geom_point(aes(x=check.Confirmed$Province.State, y=check.Confirmed$X1.29.20), color="red", size=2) +

theme(

text = element_text(family = "Gill Sans")

,plot.title = element_text(size = 20, face = "bold", hjust = 0.5)

,plot.subtitle = element_text(size = 25, family = "Courier", face = "bold", hjust = 0.5)

,axis.text = element_text(size = 12)

,axis.title = element_text(size = 20)

,axis.text.x = element_blank()

)#ggplot(check.Deaths) +

# geom_point(aes(x=check.Deaths$Province.State, y=check.Deaths$X1.29.20), color="red", size=2)#

#ggplot(check.Recovered) +

# geom_point(aes(x=check.Recovered$Province.State, y=check.Recovered$X1.29.20), color="red", size=2)# Let's find the name of this outlier:-

check.Confirmed[which(check.Confirmed$X1.29.20 > 400), c("Province.State", "Country.Region")]## Province.State Country.Region

## 155 Hubei China#check.Deaths[which(check.Deaths$X1.29.20 > 15), c("Province.State", "Country.Region")]

#check.Recovered[which(check.Recovered$X1.29.20 > 20), c("Province.State", "Country.Region")]So, it’s Hubei.

We’ll verify it by comparison of mean value on daily basis, including and excluding the Hubei province.

# Here we are trying to compare the mean values of everyday, including and excluding Hubei province

With.Hubei = as.numeric(apply(check.Confirmed[,5:ncol(check.Confirmed)], 2, mean))

exceptHubei = check.Confirmed[ which(str_detect(check.Confirmed$Province.State, "Hubei", negate = T)), ]

Without.Hubei = as.numeric(apply(exceptHubei[,5:ncol(exceptHubei)], 2, mean))

# creating a dataframe for comperision

Mean.Comparison.Table = data.frame(

"Date" = as.character(colnames(check.Confirmed)[5:ncol(check.Confirmed)]),

"With Hubei" = c(With.Hubei),

"Without Hubei" = c(Without.Hubei))

tail(Mean.Comparison.Table, 10)## Date With.Hubei Without.Hubei

## 49 X3.10.20 253.5824 108.7189

## 50 X3.11.20 269.1563 124.2983

## 51 X3.12.20 274.4625 129.5987

## 52 X3.13.20 310.5567 165.7597

## 53 X3.14.20 333.8865 189.1309

## 54 X3.15.20 358.1949 213.4828

## 55 X3.16.20 388.4154 243.7597

## 56 X3.17.20 421.7794 277.1931

## 57 X3.18.20 459.9315 315.4249

## 58 X3.19.20 519.3191 374.9399So it’s clear that the Hubei is the outlier!

# let's remove Hubei from our dataset:

Hubei.Confirmed = check.Confirmed[ which(str_detect(check.Confirmed$Province.State, "Hubei", negate = F)), ]

check.Confirmed = check.Confirmed[ which(str_detect(check.Confirmed$Province.State, "Hubei", negate = T)), ]

Hubei.Deaths = check.Deaths[ which(str_detect(check.Deaths$Province.State, "Hubei", negate = F)),]

check.Deaths = check.Deaths[ which(str_detect(check.Deaths$Province.State, "Hubei", negate = T)), ]

Hubei.Recovered = check.Recovered[ which(str_detect(check.Recovered$Province.State, "Hubei", negate = F)), ]

check.Recovered = check.Recovered[ which(str_detect(check.Recovered$Province.State, "Hubei", negate = T)), ]

## Rectifying Row sequences

row.names(check.Confirmed) <- NULL

row.names(check.Deaths) <- NULL

row.names(check.Recovered) <- NULL## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 155 Hubei China 30.9756 112.2707 444 444

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 155 549 761 1058 1423 3554 3554 4903

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 155 5806 7153 11177 13522 16678 19665 22112 24953

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 155 27100 29631 31728 33366 33366 48206 54406 56249

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 155 58182 59989 61682 62031 62442 62662 64084

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 155 64084 64287 64786 65187 65596 65914 66337 66907

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 155 67103 67217 67332 67466 67592 67666 67707 67743

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 155 67760 67773 67781 67786 67790 67794 67798

## X3.17.20 X3.18.20 X3.19.20

## 155 67799 67800 67800# Let's check the once dimention more

# also checking dimention

cat("\nEarlier dimention: 467 X 62\n\n") # after removing Cruis Ship##

## Earlier dimention: 467 X 62## New dimention: 466 62# Let's visualize once more

options(repr.plot.width=12, repr.plot.height=10)

ggplot(check.Confirmed) +

geom_point(aes(x=check.Confirmed$Province.State, y=check.Confirmed$X1.29.20), color="red", size=2) +

theme(

text = element_text(family = "Gill Sans")

,plot.title = element_text(size = 20, face = "bold", hjust = 0.5)

,plot.subtitle = element_text(size = 25, family = "Courier", face = "bold", hjust = 0.5)

,axis.text = element_text(size = 12)

,axis.title = element_text(size = 20)

,axis.text.x = element_blank()

)Although now it’s comparatively better, still have some outliers…

# Let's find them out, too:-

check.Confirmed[which(check.Confirmed$X1.29.20 > 100), c("Province.State", "Country.Region")]## Province.State Country.Region

## 158 Guangdong China

## 159 Henan China

## 160 Zhejiang China

## 161 Hunan China

## 162 Anhui China

## 163 Jiangxi China

## 164 Shandong China

## 166 Chongqing China

## 167 Sichuan China

## 170 Beijing China# Checking for mean comperision

With.China = as.numeric(apply(check.Confirmed[,5:ncol(check.Confirmed)], 2, mean))

exceptChina = check.Confirmed[ which(str_detect(check.Confirmed$Country.Region, "China", negate = T)), ]

Without.China = as.numeric(apply(exceptChina[,5:ncol(exceptChina)], 2, mean))

# comperision

Mean.Comparison.Table = data.frame(

"Date" = as.character(colnames(check.Confirmed)[5:ncol(check.Confirmed)]),

"With China" = c(With.China),

"Without China" = c(Without.China))

head(Mean.Comparison.Table, 10)## Date With.China Without.China

## 1 X1.22.20 0.2381974 0.01612903

## 2 X1.23.20 0.4506438 0.02534562

## 3 X1.24.20 0.8412017 0.04838710

## 4 X1.25.20 1.4442060 0.06451613

## 5 X1.26.20 2.2746781 0.09907834

## 6 X1.27.20 3.2274678 0.11520737

## 7 X1.28.20 4.3433476 0.15898618

## 8 X1.29.20 5.6051502 0.18202765

## 9 X1.30.20 7.1480687 0.21428571

## 10 X1.31.20 8.8454936 0.29032258So, while talking about the whole world, the complete mainland of China seems to be the outlier. Although, we’ll have to verify it first.

But because, it’s not a single row, we will perform this action later i.e. during data-transformation.

* Data transformation

# We've already saved the cleaned version of the all the files

# Loading the files in order to transform the dataset(s)

# loading raw data - from source

Confirmed = read.csv("../static/cleaned/time_series_19-covid-Confirmed.csv")

Deaths = read.csv("../static/cleaned/time_series_19-covid-Deaths.csv")

Recovered = read.csv("../static/cleaned/time_series_19-covid-Recovered.csv")

Hubei.Confirmed = read.csv("../static/cleaned/Hubei/time_series_19-covid-Confirmed.csv")

Hubei.Deaths = read.csv("../static/cleaned/Hubei/time_series_19-covid-Deaths.csv")

Hubei.Recovered = read.csv("../static/cleaned/Hubei/time_series_19-covid-Recovered.csv")

Diamond.Princess.Confirmed = read.csv("../static/cleaned/Diamond-Princess/time_series_19-covid-Confirmed.csv")

Diamond.Princess.Deaths = read.csv("../static/cleaned/Diamond-Princess/time_series_19-covid-Deaths.csv")

Diamond.Princess.Recovered = read.csv("../static/cleaned/Diamond-Princess/time_series_19-covid-Recovered.csv")# as known, all of these files have same set of columns,

# the only things that differ are data values in dates' columns

# Let's see any one dataset's structure (as all are similer)

str(Hubei.Recovered)## 'data.frame': 1 obs. of 62 variables:

## $ State : Factor w/ 1 level "Hubei": 1

## $ Country.Region: Factor w/ 1 level "China": 1

## $ Lat : num 31

## $ Long : num 112

## $ X1.22.20 : int 28

## $ X1.23.20 : int 28

## $ X1.24.20 : int 31

## $ X1.25.20 : int 32

## $ X1.26.20 : int 42

## $ X1.27.20 : int 45

## $ X1.28.20 : int 80

## $ X1.29.20 : int 88

## $ X1.30.20 : int 90

## $ X1.31.20 : int 141

## $ X2.1.20 : int 168

## $ X2.2.20 : int 295

## $ X2.3.20 : int 386

## $ X2.4.20 : int 522

## $ X2.5.20 : int 633

## $ X2.6.20 : int 817

## $ X2.7.20 : int 1115

## $ X2.8.20 : int 1439

## $ X2.9.20 : int 1795

## $ X2.10.20 : int 2222

## $ X2.11.20 : int 2639

## $ X2.12.20 : int 2686

## $ X2.13.20 : int 3459

## $ X2.14.20 : int 4774

## $ X2.15.20 : int 5623

## $ X2.16.20 : int 6639

## $ X2.17.20 : int 7862

## $ X2.18.20 : int 9128

## $ X2.19.20 : int 10337

## $ X2.20.20 : int 11788

## $ X2.21.20 : int 11881

## $ X2.22.20 : int 15299

## $ X2.23.20 : int 15343

## $ X2.24.20 : int 16748

## $ X2.25.20 : int 18971

## $ X2.26.20 : int 20969

## $ X2.27.20 : int 23383

## $ X2.28.20 : int 26403

## $ X2.29.20 : int 28993

## $ X3.1.20 : int 31536

## $ X3.2.20 : int 33934

## $ X3.3.20 : int 36208

## $ X3.4.20 : int 38557

## $ X3.5.20 : int 40592

## $ X3.6.20 : int 42033

## $ X3.7.20 : int 43500

## $ X3.8.20 : int 45235

## $ X3.9.20 : int 46488

## $ X3.10.20 : int 47743

## $ X3.11.20 : int 49134

## $ X3.12.20 : int 50318

## $ X3.13.20 : int 51553

## $ X3.14.20 : int 52960

## $ X3.15.20 : int 54288

## $ X3.16.20 : int 55142

## $ X3.17.20 : int 56003

## $ X3.18.20 : int 56927

## $ X3.19.20 : int 57682- Now, recalling the Problem Statement, we aim to find out the status of COVID-19 in China, within next 7 days

- In order to do so, we need to analyse the status of COVID-19 on all the previous days

What would it tell us?

By this, we’d be capable enough to make an estimate by what RATE the Coronavirus is spreading since late January.

Hence, we need to transform the data in order:

- such that rows hold every data Country wise, instead of State wise

- to include a new column “Date” to store aggregate data (of 3 datasets) in a single place

- remove unnecessary columns i.e. States, Latitude & Longitude

Arranging data Country-Wise

Steps:

## Province.State Country.Region Lat Long X1.22.20 X1.23.20

## 461 New Caledonia France -20.9043 165.6180 0 0

## 462 Bermuda United Kingdom 32.3078 -64.7505 0 0

## 463 Others Chad 15.4542 18.7322 0 0

## 464 Others El Salvador 13.7942 -88.8965 0 0

## 465 Others Fiji -17.7134 178.0650 0 0

## 466 Others Nicaragua 12.8654 -85.2072 0 0

## X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20 X1.29.20 X1.30.20

## 461 0 0 0 0 0 0 0

## 462 0 0 0 0 0 0 0

## 463 0 0 0 0 0 0 0

## 464 0 0 0 0 0 0 0

## 465 0 0 0 0 0 0 0

## 466 0 0 0 0 0 0 0

## X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20 X2.6.20 X2.7.20

## 461 0 0 0 0 0 0 0 0

## 462 0 0 0 0 0 0 0 0

## 463 0 0 0 0 0 0 0 0

## 464 0 0 0 0 0 0 0 0

## 465 0 0 0 0 0 0 0 0

## 466 0 0 0 0 0 0 0 0

## X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20 X2.14.20 X2.15.20

## 461 0 0 0 0 0 0 0 0

## 462 0 0 0 0 0 0 0 0

## 463 0 0 0 0 0 0 0 0

## 464 0 0 0 0 0 0 0 0

## 465 0 0 0 0 0 0 0 0

## 466 0 0 0 0 0 0 0 0

## X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20 X2.22.20

## 461 0 0 0 0 0 0 0

## 462 0 0 0 0 0 0 0

## 463 0 0 0 0 0 0 0

## 464 0 0 0 0 0 0 0

## 465 0 0 0 0 0 0 0

## 466 0 0 0 0 0 0 0

## X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20 X3.1.20

## 461 0 0 0 0 0 0 0 0

## 462 0 0 0 0 0 0 0 0

## 463 0 0 0 0 0 0 0 0

## 464 0 0 0 0 0 0 0 0

## 465 0 0 0 0 0 0 0 0

## 466 0 0 0 0 0 0 0 0

## X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 461 0 0 0 0 0 0 0 0

## 462 0 0 0 0 0 0 0 0

## 463 0 0 0 0 0 0 0 0

## 464 0 0 0 0 0 0 0 0

## 465 0 0 0 0 0 0 0 0

## 466 0 0 0 0 0 0 0 0

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20

## 461 0 0 0 0 0 0 0

## 462 0 0 0 0 0 0 0

## 463 0 0 0 0 0 0 0

## 464 0 0 0 0 0 0 0

## 465 0 0 0 0 0 0 0

## 466 0 0 0 0 0 0 0

## X3.17.20 X3.18.20 X3.19.20

## 461 0 0 2

## 462 0 0 2

## 463 0 0 1

## 464 0 0 1

## 465 0 0 1

## 466 0 0 1# Most of the states' name is not identified

unknown = nrow(Recovered[which(str_detect(Recovered$Province.State, "Others")),])

cat(unknown, "/", nrow(Recovered), " States are NOT identified")## 146 / 466 States are NOT identified# Ultimatly, any precaution/cure or action is more likely be taken onto the country level, rather than the individual state, as it's the case of a severe Epidemic

# Only then it would be much easier for us to make any possible estimate for the world as well, due to not having really a huge data about each and every single state of the countries.As we know that Country column is a Factor, we can easily list those countries’, who have reported Confirmed cases (on the daily basis):

Countries = levels(Confirmed$Country.Region)

cat("\nTotal number of affected countries: ", nlevels(Confirmed$Country.Region), "\n\n\nCountries:", head(as.matrix(Countries), 5) )##

## Total number of affected countries: 153

##

##

## Countries: Afghanistan Albania Algeria Andorra Antigua and Barbuda## Functions for extracting required data

# finds the total cases reported in given country

# (by Adding all the data of different states in it)

country.aggregate.daily <- function(dfName, country) {

df <- get(dfName)

df = df[which(str_detect(df$Country.Region, country)),]

df = cbind(States = df[,1], Country = df[,2], df[,5:ncol(df)]) # ELEMINATING LATITUDE/LONGITUDE Col.

row.names(df) <- NULL

temp = df # all states' data of a country

df = temp[1,]

df[3:ncol(temp)] = apply( temp[,3:ncol(temp)],

2,

sum

) # applying sum of all the states' values

df = df[2:ncol(df)] # removing column 'States'

row.names(df) <- NULL

return(df)

}

# generated a dataframe having required data arranged Country-Wise

# (by appending every single country's data)

countries.daily <- function(dfName, cList) {

n = length(cList) # number of countries

flag = 0

for (i in cList) {

if(flag == 0) {

df = country.aggregate.daily(dfName, i)

flag = 1

} else {

temp = country.aggregate.daily(dfName, i)

df = rbind(df, temp)

}

}

row.names(df) <- NULL

return(df)

}China.Confirmed = country.aggregate.daily("Confirmed", "China")

World.Confirmed = countries.daily("Confirmed", Countries)

China.Confirmed## Country X1.22.20 X1.23.20 X1.24.20 X1.25.20 X1.26.20 X1.27.20 X1.28.20

## 1 China 104 199 371 645 1017 1454 1955

## X1.29.20 X1.30.20 X1.31.20 X2.1.20 X2.2.20 X2.3.20 X2.4.20 X2.5.20

## 1 2533 3238 3996 4738 5453 6194 7029 7775

## X2.6.20 X2.7.20 X2.8.20 X2.9.20 X2.10.20 X2.11.20 X2.12.20 X2.13.20

## 1 8475 9157 9714 10198 10626 11020 11393 11689

## X2.14.20 X2.15.20 X2.16.20 X2.17.20 X2.18.20 X2.19.20 X2.20.20 X2.21.20

## 1 11952 12164 12331 12445 12529 12588 12635 12888

## X2.22.20 X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20 X2.28.20 X2.29.20

## 1 12917 12938 12954 12968 12979 13004 13014 13019

## X3.1.20 X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20 X3.7.20 X3.8.20 X3.9.20

## 1 13025 13033 13044 13054 13071 13098 13104 13116 13117

## X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20 X3.15.20 X3.16.20 X3.17.20

## 1 13127 13148 13151 13159 13187 13209 13235 13259

## X3.18.20 X3.19.20

## 1 13303 13357## Country X1.22.20 X1.23.20 X1.24.20 X1.25.20 X1.26.20

## 1 Afghanistan 0 0 0 0 0

## 2 Albania 0 0 0 0 0

## 3 Algeria 0 0 0 0 0

## 4 Andorra 0 0 0 0 0

## 5 Antigua and Barbuda 0 0 0 0 0

## 6 Argentina 0 0 0 0 0

## X1.27.20 X1.28.20 X1.29.20 X1.30.20 X1.31.20 X2.1.20 X2.2.20 X2.3.20

## 1 0 0 0 0 0 0 0 0

## 2 0 0 0 0 0 0 0 0

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.4.20 X2.5.20 X2.6.20 X2.7.20 X2.8.20 X2.9.20 X2.10.20 X2.11.20

## 1 0 0 0 0 0 0 0 0

## 2 0 0 0 0 0 0 0 0

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.12.20 X2.13.20 X2.14.20 X2.15.20 X2.16.20 X2.17.20 X2.18.20 X2.19.20

## 1 0 0 0 0 0 0 0 0

## 2 0 0 0 0 0 0 0 0

## 3 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.20.20 X2.21.20 X2.22.20 X2.23.20 X2.24.20 X2.25.20 X2.26.20 X2.27.20

## 1 0 0 0 0 1 1 1 1

## 2 0 0 0 0 0 0 0 0

## 3 0 0 0 0 0 1 1 1

## 4 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 0 0 0 0

## X2.28.20 X2.29.20 X3.1.20 X3.2.20 X3.3.20 X3.4.20 X3.5.20 X3.6.20

## 1 1 1 1 1 1 1 1 1

## 2 0 0 0 0 0 0 0 0

## 3 1 1 1 3 5 12 12 17

## 4 0 0 0 1 1 1 1 1

## 5 0 0 0 0 0 0 0 0

## 6 0 0 0 0 1 1 1 2

## X3.7.20 X3.8.20 X3.9.20 X3.10.20 X3.11.20 X3.12.20 X3.13.20 X3.14.20

## 1 1 4 4 5 7 7 7 11

## 2 0 0 2 10 12 23 33 38

## 3 17 19 20 20 20 24 26 37

## 4 1 1 1 1 1 1 1 1

## 5 0 0 0 0 0 0 1 1

## 6 8 12 12 17 19 19 31 34

## X3.15.20 X3.16.20 X3.17.20 X3.18.20 X3.19.20

## 1 16 21 22 22 22

## 2 42 51 55 59 64

## 3 48 54 60 74 87

## 4 1 2 39 39 53

## 5 1 1 1 1 1

## 6 45 56 68 79 97Moving to the next step

We need date wise data:

It’s so because, we aim to analyse data on the daily basis > Hence, we’d have to add another column “Date” or simply “Day” (to hold day-> 1, 2…)

in order to do so, we’d have to transform our data into Cross-sectional (China, Hubei & Diamond Princess) or Pooled data (Countries of world other than China)

Let’s understand what a Cross-sectional & a Pooled data is:

- Cross-sectional data: Data of one or more variables, collected at the same point in time.

- Pooled data: A combination of time series data and cross-sectional data.

## Functions

countries.daily.bulk.summary = function(cList) { # date wise country data

# structure of resulting dataset (initially blank)

df <- data.frame(

Country = NULL,

Day = NULL, # day no.

Date = NULL,

Confirmed = NULL,

Deaths = NULL,

Recovered = NULL

)

# calculating all countries' data (date wise) through iteration

for(i in cList) {

this.one.confirmed = country.aggregate.daily("Confirmed", i)

this.one.deaths = country.aggregate.daily("Deaths", i)

this.one.recovered = country.aggregate.daily("Recovered", i)

times = ncol(this.one.confirmed)-1 # no. of days

day = 1:times

d = as.Date("21-01-2020", format(c("%d-%m-%Y")))

date = as.character((day + d), format(c("%d-%m-%Y"))) # its lenngth is equal to --> no. of days

date = factor(c(date), levels = date)

#max(Deaths.temp[1,5:ncol(Deaths.temp)])

confirmed = as.numeric(this.one.confirmed[1,2:ncol(this.one.confirmed)])

deaths = as.numeric(this.one.deaths[1,2:ncol(this.one.deaths)])

recovered = as.numeric(this.one.recovered[1,2:ncol(this.one.recovered)])

dataset <- data.frame(

Country = rep(i, times),

Day = factor(c(1:length(date)), levels = 1:length(date)),

Date = date,

Confirmed = confirmed,

Deaths = deaths,

Recovered = recovered

)

# joining this country

df = rbind(df, dataset)

}

return(df)

}## Country Day Date Confirmed Deaths Recovered

## 1 Afghanistan 1 22-01-2020 0 0 0

## 2 Afghanistan 2 23-01-2020 0 0 0

## 3 Afghanistan 3 24-01-2020 0 0 0

## 4 Afghanistan 4 25-01-2020 0 0 0

## 5 Afghanistan 5 26-01-2020 0 0 0

## 6 Afghanistan 6 27-01-2020 0 0 0For better analysis, let’s add 2 more columns:

1. Closed.Cases = consists all cases, that are Expired or Recovered

2. Active.Cases = cases that are neither Expired nor Recovered

bulk$Active.Cases = bulk$Confirmed - (bulk$Deaths + bulk$Recovered)

bulk$Closed.Cases = bulk$Deaths + bulk$Recovered

tail(bulk)## Country Day Date Confirmed Deaths Recovered Active.Cases

## 8869 Zambia 53 14-03-2020 0 0 0 0

## 8870 Zambia 54 15-03-2020 0 0 0 0

## 8871 Zambia 55 16-03-2020 0 0 0 0

## 8872 Zambia 56 17-03-2020 0 0 0 0

## 8873 Zambia 57 18-03-2020 2 0 0 2

## 8874 Zambia 58 19-03-2020 2 0 0 2

## Closed.Cases

## 8869 0

## 8870 0

## 8871 0

## 8872 0

## 8873 0

## 8874 0

So, our Pooled dataset is ready.

Let’s understand this dataset:-

## 'data.frame': 8874 obs. of 8 variables:

## $ Country : Factor w/ 153 levels "Afghanistan",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ Day : Factor w/ 58 levels "1","2","3","4",..: 1 2 3 4 5 6 7 8 9 10 ...

## $ Date : Factor w/ 58 levels "22-01-2020","23-01-2020",..: 1 2 3 4 5 6 7 8 9 10 ...

## $ Confirmed : num 0 0 0 0 0 0 0 0 0 0 ...

## $ Deaths : num 0 0 0 0 0 0 0 0 0 0 ...

## $ Recovered : num 0 0 0 0 0 0 0 0 0 0 ...

## $ Active.Cases: num 0 0 0 0 0 0 0 0 0 0 ...

## $ Closed.Cases: num 0 0 0 0 0 0 0 0 0 0 ...Explanation of Pooled Datasets (bulk & four)

* Pooled data is a combination of time series data and cross-sectional data

Number of columns: 8

Here we are discussing the Bulk dataset

Country:

- Datatype: Factor with 153-levels

- Holds the name of Countries for daily data

- Eg.: Japan

Day:

- Datatype: Factor with 58-levels

- Holds days numbered from 1 up to the last day

- Eg.: for Jan 22nd, Day is 1, Jan 23rd, Day is 2 and so on..

Date:

- Datatype: Factor with 58-levels

- Holds dates in format dd-mm-yyyy and where individual level has the datatype Date

- Eg.: 22-01-2020

Confirmed:

- Datatype: num

- Holds total number of confirm cases in a country, up to the given date/day

- Eg.: up to 01-02-2020, Japan reported 20 COVID-19 cases

Deaths:

- Datatype: num

- Holds total number of deaths in a country, up to the given date/day

- Eg.: up to 01-02-2020, Japan reported no Deaths

Recovered:

- Datatype: num

- Holds total number of recoveries in a county, up to the given date/day

- Eg.: up to 01-02-2020, Japan reported 1 Recoveries

Active.Cases:

- Datatype: num

- Holds total Confirmed cases, except Deaths & Recoveries in a country, up to the given date/day

- Eg.: up to 01-02-2020, Japan had 19 Active cases

Closed.Cases:

- Datatype: num

- Holds total number of Recoveries or Deaths in a country, up to the given date/day

- Eg.: up to 01-02-2020, Japan had closed 1 COVID-19 case

Now we are all set to filter out China from this dataset!

# filtering out the China

China.dataset = bulk[which(str_detect(bulk$Country, 'China')),]

# World Pooled dataset (except china)

bulk = bulk[which(str_detect(bulk$Country, 'China', negate=T)),] # updating bulk itself## Country Day Date Confirmed Deaths Recovered Active.Cases

## 1741 China 1 22-01-2020 104 0 0 104

## 1742 China 2 23-01-2020 199 1 2 196

## 1743 China 3 24-01-2020 371 2 5 364

## 1744 China 4 25-01-2020 645 2 7 636

## 1745 China 5 26-01-2020 1017 4 7 1006

## 1746 China 6 27-01-2020 1454 6 13 1435

## Closed.Cases

## 1741 0

## 1742 3

## 1743 7

## 1744 9

## 1745 11

## 1746 19In the same manner, we create two datasets:

- holds all the data of all the countries except Hubei in China

- holds whole data categorized into four locations.

These four locations are:

- Diamond Princess

- Hubei province (alone) same as Diamond Princess Cruise Ship

- China alone data (Except Hubei province)

- World (Except China), collectively

The 2nd type of dataset is very necessary because it consists of all the outliers as well…

* Actually, here we can take them into consideration because:

- Here we are comparing them with the whole World’s data collectively

- It’s that kind of MEDICAL Data, where outliers can not be ignored! In-fact this single country and that ship are spreading the disease, rapidly.

- This 2nd dataset alone keeps track on the whole data, reported till the last date

- We’ve already saved this dataset

## Load both date wise-datasets (world & FOUR)

# includes data of all the countries

all = read.csv('../static/pooled/countryWise_bulk_summary.csv')

# includes data of four majour location

four = read.csv('../static/pooled/Four_dataset_locationWise.csv')## 'data.frame': 8874 obs. of 8 variables:

## $ Country : Factor w/ 153 levels "Afghanistan",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ Day : int 1 2 3 4 5 6 7 8 9 10 ...

## $ Date : Factor w/ 58 levels "01-02-2020","01-03-2020",..: 41 43 45 47 49 51 53 55 57 58 ...

## $ Confirmed : int 0 0 0 0 0 0 0 0 0 0 ...

## $ Deaths : int 0 0 0 0 0 0 0 0 0 0 ...

## $ Recovered : int 0 0 0 0 0 0 0 0 0 0 ...

## $ Active.Cases: int 0 0 0 0 0 0 0 0 0 0 ...

## $ Closed.Cases: int 0 0 0 0 0 0 0 0 0 0 ...## 'data.frame': 232 obs. of 8 variables:

## $ Location : Factor w/ 4 levels "China","Diamond Princess",..: 3 3 3 3 3 3 3 3 3 3 ...

## $ Day : int 1 2 3 4 5 6 7 8 9 10 ...

## $ Date : Factor w/ 58 levels "01-02-2020","01-03-2020",..: 41 43 45 47 49 51 53 55 57 58 ...

## $ Confirmed : int 444 444 549 761 1058 1423 3554 3554 4903 5806 ...

## $ Deaths : int 17 17 24 40 52 76 125 125 162 204 ...

## $ Recovered : int 28 28 31 32 42 45 80 88 90 141 ...

## $ Active.Cases: int 399 399 494 689 964 1302 3349 3341 4651 5461 ...

## $ Closed.Cases: int 45 45 55 72 94 121 205 213 252 345 ...

In the all dataset, everything is same as in ‘Bulk’ dataset

In the four dataset:

Location:

- Datatype: Factor with 4-levels

- Holds the name of Locations (as Countries in ‘Bulk’) for daily data

- Levels: World, China, Hubei & Diamond Princess

Rest 7 columns are same as those of ‘Bulk’ dataset

Let’s analyse that how China differ from rest of the data using Boxplots

Why Boxplot:-

- It’s a single visualization that tells about many statistical quantifiers

- It’s very easy to detect Outliers through boxplot

# Initially we plot dataset with majour Locations

options(repr.plot.width=12, repr.plot.height=10)

withChina<-ggplot(four, aes(x=Day, y=Confirmed, color=Day)) +

geom_boxplot(aes(group=Day)) +

labs(title="Including China") +

theme_classic() +

theme(

text = element_text(family = "Gill Sans")

,plot.title = element_text(size = 20, face = "bold", hjust = 0.5)

,plot.subtitle = element_text(size = 25, family = "Courier", face = "bold", hjust = 0.5)

,axis.text = element_text(size = 12)

,axis.title = element_text(size = 20)

)

cat("\n\n")

Here we get a continuous sequence of outliers, for roughly up to 45 days

Now, as per our previous analysis (through word-clouds and mean-comparison, we assumed the China as this outlier)

In order to Test our hypothesis, let’s plot China alone, as well as Rest of all data except China

options(repr.plot.width=12, repr.plot.height=10)

chinaAlone <- ggplot(four[which(str_detect(four$Location, "China", negate=F)),], aes(x=Day, y=Confirmed, color=Day)) +

geom_point(aes(group=Day)) +

labs(title="Only China") +

theme_classic() +

theme(

text = element_text(family = "Gill Sans")

,plot.title = element_text(size = 20, face = "bold", hjust = 0.5)

,plot.subtitle = element_text(size = 25, family = "Courier", face = "bold", hjust = 0.5)

,axis.text = element_text(size = 12)

,axis.title = element_text(size = 20)

)

withoutChina <- ggplot(four[which(str_detect(four$Location, "China", negate=T)),], aes(x=Day, y=Confirmed, color=Day)) +

geom_boxplot(aes(group=Day)) +

labs(title="Excluding China") +

theme_classic() +

theme(

text = element_text(family = "Gill Sans")

,plot.title = element_text(size = 20, face = "bold", hjust = 0.5)

,plot.subtitle = element_text(size = 25, family = "Courier", face = "bold", hjust = 0.5)

,axis.text = element_text(size = 12)

,axis.title = element_text(size = 20)

)

cat("\n\n")Comparing above two plots with our previous single plot of the whole (four) data categorize, collectively:

1. First box plots resembles the sequence, that is far more similar to the Outliers’ sequence 2. Along with this, when we try plotting the whole data again, after removing the China, we find that there is no outlier, at all

So, finally we can say that the China is an outlier, and hence, we’ll study China, separately!

## Country Day Date Confirmed Deaths Recovered Active.Cases

## 1 Afghanistan 1 22-01-2020 0 0 0 0

## 2 Afghanistan 2 23-01-2020 0 0 0 0

## 3 Afghanistan 3 24-01-2020 0 0 0 0

## 4 Afghanistan 4 25-01-2020 0 0 0 0

## 5 Afghanistan 5 26-01-2020 0 0 0 0

## 6 Afghanistan 6 27-01-2020 0 0 0 0

## Closed.Cases

## 1 0

## 2 0

## 3 0

## 4 0

## 5 0

## 6 0## Location Day Date Confirmed Deaths Recovered Active.Cases

## 1 Hubei 1 22-01-2020 444 17 28 399

## 2 Hubei 2 23-01-2020 444 17 28 399

## 3 Hubei 3 24-01-2020 549 24 31 494

## 4 Hubei 4 25-01-2020 761 40 32 689

## 5 Hubei 5 26-01-2020 1058 52 42 964

## 6 Hubei 6 27-01-2020 1423 76 45 1302

## Closed.Cases

## 1 45

## 2 45

## 3 55

## 4 72

## 5 94

## 6 121

Now, as we aim to analyse the status of COVID-19 within next 10 days, which means that we basically want to analyse the active or closed cases within that time duration.

But, as these 2 are just discrete figures and hence, can vary depending upon the Confirmed, Recovery & Death cases

It means all these figures can vary dynamically